Preface

The last post illustrated setting up a basic RabbitMQ federation. That post used the basic guest account when connecting similarly named virtual hosts on the downstream to the upstream. It's also possible to use different users and connect different virtual hosts.

Connections

The picture below is a shot of the connections on the upstream broker. It shows that there is a connection to the FederationDemo virtual host. You can see that it is the guest user which is connected.

The credentials are specified in the URI used when creating the upstream. Just below the 'Add new upstream' panel is a panel with different examples. These show how use various credentials.

When different credentials are supplied in the URI, the federation is created with a different user. It holds that the user must have access to the upstream virtual host. Thus, an upstream created with a URI like amqp://FederatedUser:user@RABBIT/FederationDemo opens the following connection:

That's It

Connecting with different credentials is easy. You can specify a different user, or a different virtual host.

Tuesday, September 17, 2013

Friday, September 13, 2013

Getting Started with RabbitMQ Federations and EasyNetQ

Preface

One of the uses we needed out of RabbitMQ was passing messages between two separate departments. These departments each had their own Rabbit cluster. They each had their own LAN. This led us down the path of using a federated exchange to transport messages between the clusters.

The source code is available on GitHub.

Stuff Used

RabbitMQ is very flexible. There are always about a dozen ways to solve the same problem. This is an example of how to use topic-based routing across a federated exchange with EasyNetQ as a client library. This is not meant to be the one true way of doing it.

About Federations

A federation allows messages to flow from one RabbitMQ cluster to one or more downstream clusters. The simplest RabbitMQ federation exists between two clusters: an upstream and a downstream. The downstream cluster can be thought of as subscribing to messages from the upstream cluster.

Getting Started

Downstream (or Beta)

The downstream cluster will be receiving messages from the upstream server. There are a few things which have to be set up on the downstream cluster:

Define the Policy

A good place to start is by defining the policy. Policies are managed in the Admin->Policies screen. It’s important that the correct virtual host is selected. You’ll also want to make note of the pattern you use.

Define the Upstream

Next up is defining the address of the upstream cluster. This can be done in the Admini->Federation Upstreams screen. Again, be sure to have the correct virtual host selected.

Message Queues and Exchanges

The easiest way to create the queues and exchanges is to let EasyNetQ do it for us. Subscribing to a message with EasyNetQ causes it to create the appropriate Queues and Exchanges. It also binds the Exchanges to the Queues. After running the subscriber, create an excahnge named ‘fed.exchange’. The policy should be applied if the exchange name matches the pattern.

The subscriber code would be similar to the following:

Once the federated exchange has been created, it will need to be bound to the message exchanges. Since we’re using topics to route the various messages, we’ll want to ensure that the topics are passed through. For now, we’ll just use a ‘#’ to indicate that all topics should be passed through. The bindings should then look like the following image:

Upstream (or Alpha)

Setting up the upstream is pretty simple. The virtual host needs to be created. It needs the appropriate users assigned to it. We’ll be using the default guest account for this demo. If the guest account isn’t granted access to the virtual host, then the the downstream cluster will not be able to establish a connection.

As with the subscriber, EasyNetQ can create the exchanges for us. Messages will be discarded, until the exchanges are bound to something. Fortunately, the outbound exchange will have been created when the downstream cluster connects.

The publishing code looks like this:

Here, we’re going to bind the exchanges to the outbound federated exchange. We’ll want to bind the upstream clusters exchanges to the federated exchange. It’s important to remember the ‘#’ as a routing key. These bindings should be similar to the following picture.

Running the Apps

When the apps are run, you can see that the messages are transported from the publisher to the client. The publisher sends them to the upstream cluster. The upstream cluster then dispatches them to the downstream cluster. The downstream cluster then passes them to the subscriber.

Note that the highlighted bits show the messages were translated into the correct types:

Wrapping It Up

That’s the basics of getting a federation up and running between two clusters. The federated exchange is capable of transporting two different types of messages. Those messages are routed to the correct subscriber, based on the topic of the message.

One final note… There are a lot of other considerations when federating clusters and using topic-based routing. This blog represents the very basics. You’ll want to make sure you’ve familiarized yourself with the subject.

One of the uses we needed out of RabbitMQ was passing messages between two separate departments. These departments each had their own Rabbit cluster. They each had their own LAN. This led us down the path of using a federated exchange to transport messages between the clusters.

The source code is available on GitHub.

Stuff Used

- Two RabbitMQ clusters.

- Management plugin.

- Federation plugin.

- EasyNetQ client library.

- Similarly named virtual hosts on each cluster (FederationDemo is used in this example).

RabbitMQ is very flexible. There are always about a dozen ways to solve the same problem. This is an example of how to use topic-based routing across a federated exchange with EasyNetQ as a client library. This is not meant to be the one true way of doing it.

About Federations

A federation allows messages to flow from one RabbitMQ cluster to one or more downstream clusters. The simplest RabbitMQ federation exists between two clusters: an upstream and a downstream. The downstream cluster can be thought of as subscribing to messages from the upstream cluster.

Getting Started

Downstream (or Beta)

The downstream cluster will be receiving messages from the upstream server. There are a few things which have to be set up on the downstream cluster:

- An upstream address.

- A policy to identify the federated exchange.

- An exchange to be federated in the virtual host.

- Exchanges for the messages bound to the subscriber.

Define the Policy

A good place to start is by defining the policy. Policies are managed in the Admin->Policies screen. It’s important that the correct virtual host is selected. You’ll also want to make note of the pattern you use.

Define the Upstream

Next up is defining the address of the upstream cluster. This can be done in the Admini->Federation Upstreams screen. Again, be sure to have the correct virtual host selected.

Message Queues and Exchanges

The easiest way to create the queues and exchanges is to let EasyNetQ do it for us. Subscribing to a message with EasyNetQ causes it to create the appropriate Queues and Exchanges. It also binds the Exchanges to the Queues. After running the subscriber, create an excahnge named ‘fed.exchange’. The policy should be applied if the exchange name matches the pattern.

The subscriber code would be similar to the following:

public void Run()

{

bus.Subscribe<VisaTransaction>("Beta", PrintTransaction, configuration => configuration.WithTopic(typeof(VisaTransaction).Name));

bus.Subscribe<MasterCardTransaction>("Beta", PrintTransaction,

configuration => configuration.WithTopic(typeof(MasterCardTransaction).Name));

SpinWait.SpinUntil(() => Console.ReadKey().Key == ConsoleKey.Escape);

}

Once the federated exchange has been created, it will need to be bound to the message exchanges. Since we’re using topics to route the various messages, we’ll want to ensure that the topics are passed through. For now, we’ll just use a ‘#’ to indicate that all topics should be passed through. The bindings should then look like the following image:

Upstream (or Alpha)

Setting up the upstream is pretty simple. The virtual host needs to be created. It needs the appropriate users assigned to it. We’ll be using the default guest account for this demo. If the guest account isn’t granted access to the virtual host, then the the downstream cluster will not be able to establish a connection.

As with the subscriber, EasyNetQ can create the exchanges for us. Messages will be discarded, until the exchanges are bound to something. Fortunately, the outbound exchange will have been created when the downstream cluster connects.

The publishing code looks like this:

private void PublishMessage<T>() where T : Transaction

{

var message = Builder<T>.CreateNew().Build();

using (var channel = bus.OpenPublishChannel())

channel.Publish(message, configuration => configuration.WithTopic(typeof(T).Name));

}

Here, we’re going to bind the exchanges to the outbound federated exchange. We’ll want to bind the upstream clusters exchanges to the federated exchange. It’s important to remember the ‘#’ as a routing key. These bindings should be similar to the following picture.

Running the Apps

When the apps are run, you can see that the messages are transported from the publisher to the client. The publisher sends them to the upstream cluster. The upstream cluster then dispatches them to the downstream cluster. The downstream cluster then passes them to the subscriber.

Note that the highlighted bits show the messages were translated into the correct types:

Wrapping It Up

That’s the basics of getting a federation up and running between two clusters. The federated exchange is capable of transporting two different types of messages. Those messages are routed to the correct subscriber, based on the topic of the message.

One final note… There are a lot of other considerations when federating clusters and using topic-based routing. This blog represents the very basics. You’ll want to make sure you’ve familiarized yourself with the subject.

Thursday, August 22, 2013

RabbitMQ connection_closed_abruptly error

Preface

One of the hiccups we encountered when starting with RabbitMQ occurred when we put a proxy in front of our cluster. The proxy began probing the broker's nodes to ensure they were alive. This resulted in a lot of spam in the logs:

A Typical(?) Cluster

I'm not certain there is a typical setup for a RabbitMQ cluster. In our case, we went with what we've been calling a binary star. We took this phrase from the 0mq docs. The idea is pretty simple: it's an active/active or active/passive pair with mirrored queues.

A proxy is placed between the clients and the binary star. This separates the clients from the cluster implementation. The biggest benefit for is is the consistent IP for clients. This simplifies the client configurations. It also allows us to perform maintenance on the cluster w/o interrupting service.

RabbitMQ.config

The default Windows location for the config file, rabbitmq.config, is in the %appdata%/RabbitMQ directory. It's possible to change this location, but we'll assume the default location for now. A clean install will often not have this config file.

The first step is to create the rabbitmq.config file. You can create this in any text editor. Just remember that it must be named rabbitmq.config. It may not have a .txt or any other extension. Add the following to the file, and save it:

This will configure the connection logging to only log errors. The other types of logging, like start up messages, will be unaffected by this.

Making It Take Affect

The RabbitMQ documents indicate that the service should be restarted for the new config to take affect. We have found it necessary to re-install the broker. Fortunately, re-installation of the broker did not wipe the setup. The steps are as follows:

It's also possible to enable TCP keepalive support, by adding the appropriate line (#11):

Wrap Up

The big gotcha was having to re-install the RabbitMQ service to get the .config file changes to stick. Otherwise, this was a basic config change to ease up on the logging. Hopefully, this will help someone else...

One of the hiccups we encountered when starting with RabbitMQ occurred when we put a proxy in front of our cluster. The proxy began probing the broker's nodes to ensure they were alive. This resulted in a lot of spam in the logs:

=INFO REPORT==== 21-Aug-2013::11:14:32 === accepting AMQP connection <0.12744.35> (<proxy ip>:55613 -> <rabbit ip>:5672) =WARNING REPORT==== 21-Aug-2013::11:14:32 === closing AMQP connection <0.12744.35> (<proxy ip>:55613 -> <rabbit ip>:5672): connection_closed_abruptly

A Typical(?) Cluster

I'm not certain there is a typical setup for a RabbitMQ cluster. In our case, we went with what we've been calling a binary star. We took this phrase from the 0mq docs. The idea is pretty simple: it's an active/active or active/passive pair with mirrored queues.

A proxy is placed between the clients and the binary star. This separates the clients from the cluster implementation. The biggest benefit for is is the consistent IP for clients. This simplifies the client configurations. It also allows us to perform maintenance on the cluster w/o interrupting service.

RabbitMQ.config

The default Windows location for the config file, rabbitmq.config, is in the %appdata%/RabbitMQ directory. It's possible to change this location, but we'll assume the default location for now. A clean install will often not have this config file.

The first step is to create the rabbitmq.config file. You can create this in any text editor. Just remember that it must be named rabbitmq.config. It may not have a .txt or any other extension. Add the following to the file, and save it:

[

{rabbit, [

{log_levels,[{connection, error}]},

]}

].

This will configure the connection logging to only log errors. The other types of logging, like start up messages, will be unaffected by this.

Making It Take Affect

The RabbitMQ documents indicate that the service should be restarted for the new config to take affect. We have found it necessary to re-install the broker. Fortunately, re-installation of the broker did not wipe the setup. The steps are as follows:

- Stop the service in the services management screen.

- Uninstall RabbitMQ.

- Install RabbiMQ.

- Verify the service is running.

More RabbitMQ.config

It's also possible to enable TCP keepalive support, by adding the appropriate line (#11):

[

{rabbit, [

{log_levels,[{connection, error}]},

{tcp_listen_options,

[binary,

{packet,raw},

{reuseaddr,true},

{backlog,128},

{nodelay,true},

{exit_on_close,false},

{keepalive,true}]}

]}

].

Wrap Up

The big gotcha was having to re-install the RabbitMQ service to get the .config file changes to stick. Otherwise, this was a basic config change to ease up on the logging. Hopefully, this will help someone else...

Wednesday, August 14, 2013

Getting Started With RabbitMQ

Preface

I was tasked with giving a quick RabbitMQ intro to another development group. This post is the result of that task. Most of this stuff will be regurgitated from other sources on the web. It's just meant to help people get up and running.

First we'll look at installing RabbitMQ. Next we'll do some basic configuration, and take a quick peek at the management plug-in. Finally, we'll build a couple simple console apps to use the broker.

RabbitMQ is based on Erlang. There is a Wikipedia article which summarizes what RabbitMQ is. There's also a Google Tech Talk video on YouTube.

This article will assume that you know how to use Visual Studio: create solutions, add projects to solutions, etc. It will also assume that you are familiar with using NuGet.

Getting Ready

Before doing the exercises in this tutorial, you'll need to grab the Erlang and RabbitMQ installers.

Installers

Tools Used

I was tasked with giving a quick RabbitMQ intro to another development group. This post is the result of that task. Most of this stuff will be regurgitated from other sources on the web. It's just meant to help people get up and running.

First we'll look at installing RabbitMQ. Next we'll do some basic configuration, and take a quick peek at the management plug-in. Finally, we'll build a couple simple console apps to use the broker.

RabbitMQ is based on Erlang. There is a Wikipedia article which summarizes what RabbitMQ is. There's also a Google Tech Talk video on YouTube.

This article will assume that you know how to use Visual Studio: create solutions, add projects to solutions, etc. It will also assume that you are familiar with using NuGet.

Getting Ready

Before doing the exercises in this tutorial, you'll need to grab the Erlang and RabbitMQ installers.

Installers

Tools Used

- Visual Studio 2012

- NuGet

- EasyNetQ - v0.11.1.104 was used.

- Windows 7 - No, we haven't switched, and we might not. ;P

Installing Erlang and RabbitMQ

The RabbitMQ installation page describes how to install the components. It's pretty short and simple. It amounts to first installing Erlang, then installing RabbitMQ. The default settings should suffice.

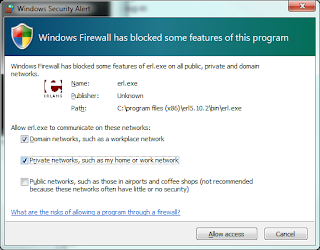

The installers should take care of any firewall settings. If they don't, you may see a dialog similar to the one below. Go ahead and add the rules for Erlang.

The RabbitMQ management plug-in uses a different port: 15672. If you want to access the management page remotely, you'll need to add the appropriate firewall rules for it.

Copy The Cookie

Once installation is complete, you'll want to copy the .erlang.cookie file to your RabbitMQ profile directory. Doing this now will avoid some headaches later in life, especially if you decide to cluster nodes. It should be located in your %systemroot% directory. Copy it to your %appdata%/RabbitMQ directory. It is important that these files be identical.

Batch Files and Initial Status

The installer should have added items to your Start menu. They'll be located in Start->All Programs->RabbitMQ Server. Open the RabbitMQ Command Prompt (sbin dir) shortcut.

This will open a command prompt. A number of operations can be performed with this console window: starting/stopping the broker, enabling plug-ins, etc. Checking the broker's status can be done by entering 'rabbitmqctl status' at the prompt.

Enabling the Management Plug-in

The next step to perform is to enable the management plug-in. This plug-in provides a web interface for controlling the broker. It can be used to manage users, exchanges, etc. The management plug-in can be issuing the commands illustrated in the following image. Also note that in most cases, I've found it necessary to restart the RabbitMQ service from the services control panel.

The commands are (in order):

Using the Management Web Page

The plug-in docs describe many of the features available. We're going to use the UI to create a test user and a virtual host. Later we'll use it to see the broker at work, create a queue, and alter some bindings.

You can access the management UI by opening a browser and navigating to http://localhost:15672. You'll be prompted with the login screen. The default username and password are guest/guest. There are a number of tabs available after logging in. The first is the Overview:

First we'll create a virtual host for testing. Virtual hosts are managed in the Virtual Host tab of the Admin screen. We'll create one called Sandbox:

Users are managed in the Users tab of the Admin screen. Expand the 'Add a user' accordion and enter a name along with a password. For this example, I used SandboxUser as the username, and sandbox as the password:

Users must have permission to access virtual hosts. There are a few ways to do this, but we'll use the Virtual Hosts tab of the Admin screen. Click on the Sandbox virtual host and expand the 'Permissions' accordion. Select the user name in the 'User' drop down and click the 'Set Permission' button. Do this for both the SandboxUser and guest users:

The Sample Apps

The source code in this article can be found on GitHub. The solution is divided into three projects: Publisher, Subscriber, and Messages. The name of each project implies what it does. I'm bypassing use of an IoC or test projects for simplicity. Hopefully, I'll get some time in a future post to illustrate how one can mix an IoC container and do some TDD...

Here's what it looks like:

The publisher sends messages to the broker. The subscriber listens to the broker for a message. The message contract is defined in the Messages project. It is referenced in both the Publisher and Subscriber.

The publisher and subscriber projects both reference a library called EasyNetQ. EasyNetQ provides a fairly simple API for interacting with a RabbitMQ broker. At the heart of this is the IBus interface. IBus is used in publishing and subscribing to messages. Our apps use a BusFactory class to create an instance of this interface:

The BusFactory class uses a connection string defined in the app.config:

The heart of our demo is in the DemoPublisher. This class illustrates the heart of the publishing operation. A message is created, a publishing channel is opened, and the message is published.

At the other end of the demo is the subscriber. The DemoSubscriber.ListenForAMessage() method uses an instance of IBus to notify the broker that it is listening for messages of type 'ExampleMessage'. When a message of that type is received, it writes the greeting to the console, and marks the done flag as true.

Running the Publisher

When the publisher is run by itself for the first time, EasyNetQ will create an exchange. However the message sent to the broker seems to disappear. This is because there is no queue defined to receive it. We can create a test queue to catch the message.

First we create a queue:

Then we bind the exchange to the queue. This can be done either in the queue detail view, or in the exchange detail view. This example shows it being done in the queue detail view. This view is reached by clicking on the queue name, after it's been created. It's important to note that the name must match exactly. It's okay to leave the 'Routing key' and 'Arguments' sections blank.

When the publisher sends a message to the broker, that message is directed to the queue, TestQueue.

Running the Subscriber

When EasyNetQ makes a subscription, it creates the queue and automatically binds it to the exchange. We can see the results both in the console output, and in the queues on the management page:

Run the Subscriber First

In creating the subscriptions and publishing the message, EasyNetQ handles the creation of the exchanges and queues for you. Be default, RabbitMQ will discard messages for which there is no destination queue. Thus, you will want to ensure that the subscribers are initialized, before you publish a message.

Wrapping It Up

That's about it for creating a simple demo for RabbitMQ. There are a lot of topics which we haven't covered: clustering, federations, high availability to name a few. Although a little dated, Manning's RabbitMQ in Action: Distributed Messenging for Everyone is worth a read. The documentation for EasyNetQ and MassTransit are other good references.

The RabbitMQ installation page describes how to install the components. It's pretty short and simple. It amounts to first installing Erlang, then installing RabbitMQ. The default settings should suffice.

The installers should take care of any firewall settings. If they don't, you may see a dialog similar to the one below. Go ahead and add the rules for Erlang.

The RabbitMQ management plug-in uses a different port: 15672. If you want to access the management page remotely, you'll need to add the appropriate firewall rules for it.

Copy The Cookie

Once installation is complete, you'll want to copy the .erlang.cookie file to your RabbitMQ profile directory. Doing this now will avoid some headaches later in life, especially if you decide to cluster nodes. It should be located in your %systemroot% directory. Copy it to your %appdata%/RabbitMQ directory. It is important that these files be identical.

Batch Files and Initial Status

The installer should have added items to your Start menu. They'll be located in Start->All Programs->RabbitMQ Server. Open the RabbitMQ Command Prompt (sbin dir) shortcut.

This will open a command prompt. A number of operations can be performed with this console window: starting/stopping the broker, enabling plug-ins, etc. Checking the broker's status can be done by entering 'rabbitmqctl status' at the prompt.

Enabling the Management Plug-in

The next step to perform is to enable the management plug-in. This plug-in provides a web interface for controlling the broker. It can be used to manage users, exchanges, etc. The management plug-in can be issuing the commands illustrated in the following image. Also note that in most cases, I've found it necessary to restart the RabbitMQ service from the services control panel.

The commands are (in order):

- rabbitmqctl stop_app

- rabbitmq-plugins enable rabbitmq_management

- rabbitmqctl start_app

Using the Management Web Page

The plug-in docs describe many of the features available. We're going to use the UI to create a test user and a virtual host. Later we'll use it to see the broker at work, create a queue, and alter some bindings.

You can access the management UI by opening a browser and navigating to http://localhost:15672. You'll be prompted with the login screen. The default username and password are guest/guest. There are a number of tabs available after logging in. The first is the Overview:

First we'll create a virtual host for testing. Virtual hosts are managed in the Virtual Host tab of the Admin screen. We'll create one called Sandbox:

Users are managed in the Users tab of the Admin screen. Expand the 'Add a user' accordion and enter a name along with a password. For this example, I used SandboxUser as the username, and sandbox as the password:

Users must have permission to access virtual hosts. There are a few ways to do this, but we'll use the Virtual Hosts tab of the Admin screen. Click on the Sandbox virtual host and expand the 'Permissions' accordion. Select the user name in the 'User' drop down and click the 'Set Permission' button. Do this for both the SandboxUser and guest users:

The Sample Apps

The source code in this article can be found on GitHub. The solution is divided into three projects: Publisher, Subscriber, and Messages. The name of each project implies what it does. I'm bypassing use of an IoC or test projects for simplicity. Hopefully, I'll get some time in a future post to illustrate how one can mix an IoC container and do some TDD...

Here's what it looks like:

The publisher sends messages to the broker. The subscriber listens to the broker for a message. The message contract is defined in the Messages project. It is referenced in both the Publisher and Subscriber.

The publisher and subscriber projects both reference a library called EasyNetQ. EasyNetQ provides a fairly simple API for interacting with a RabbitMQ broker. At the heart of this is the IBus interface. IBus is used in publishing and subscribing to messages. Our apps use a BusFactory class to create an instance of this interface:

public static class BusFactory

{

public static IBus Create()

{

var settings = ConfigurationManager.ConnectionStrings["rabbit"];

if (settings == null || string.IsNullOrEmpty(settings.ConnectionString))

throw new InvalidOperationException("Missing connection string.");

return RabbitHutch.CreateBus(settings.ConnectionString);

}

}

The BusFactory class uses a connection string defined in the app.config:

<connectionStrings>

<add name="rabbit" connectionString="host=localhost;virtualHost=Sandbox;username=SandboxUser;password=sandbox;prefetchcount=1;" />

</connectionStrings>

The heart of our demo is in the DemoPublisher. This class illustrates the heart of the publishing operation. A message is created, a publishing channel is opened, and the message is published.

public class DemoPublisher

{

private readonly IBus bus;

public DemoPublisher(IBus bus)

{

this.bus = bus;

}

public void Publish()

{

var message = new ExampleMessage { Greeting = "Hello, world!" };

using (var channel = bus.OpenPublishChannel())

channel.Publish(message);

}

}

At the other end of the demo is the subscriber. The DemoSubscriber.ListenForAMessage() method uses an instance of IBus to notify the broker that it is listening for messages of type 'ExampleMessage'. When a message of that type is received, it writes the greeting to the console, and marks the done flag as true.

public class DemoSubscriber

{

private readonly IBus bus;

public DemoSubscriber(IBus bus)

{

this.bus = bus;

}

public void ListenForAMessage()

{

var done = false;

bus.Subscribe<ExampleMessage>("subscriber", message =>

{

Console.WriteLine(message.Greeting);

done = true;

});

SpinWait.SpinUntil(() => done);

}

}

Running the Publisher

When the publisher is run by itself for the first time, EasyNetQ will create an exchange. However the message sent to the broker seems to disappear. This is because there is no queue defined to receive it. We can create a test queue to catch the message.

First we create a queue:

Then we bind the exchange to the queue. This can be done either in the queue detail view, or in the exchange detail view. This example shows it being done in the queue detail view. This view is reached by clicking on the queue name, after it's been created. It's important to note that the name must match exactly. It's okay to leave the 'Routing key' and 'Arguments' sections blank.

When the publisher sends a message to the broker, that message is directed to the queue, TestQueue.

Running the Subscriber

When EasyNetQ makes a subscription, it creates the queue and automatically binds it to the exchange. We can see the results both in the console output, and in the queues on the management page:

In creating the subscriptions and publishing the message, EasyNetQ handles the creation of the exchanges and queues for you. Be default, RabbitMQ will discard messages for which there is no destination queue. Thus, you will want to ensure that the subscribers are initialized, before you publish a message.

Wrapping It Up

That's about it for creating a simple demo for RabbitMQ. There are a lot of topics which we haven't covered: clustering, federations, high availability to name a few. Although a little dated, Manning's RabbitMQ in Action: Distributed Messenging for Everyone is worth a read. The documentation for EasyNetQ and MassTransit are other good references.

Monday, March 11, 2013

Building a Service App: Logging

Preface

In the last post, we saw how to get started hooking things up with Windsor, TopShelf, and NLog. This post will take a look at logging with Windsor and NLog. We'll also look at using a utility called Log2Console to view our log messages as they are generated.

The Packages

This post looks at using the following NuGet packages:

Adding the NLog.Schema package to a project adds a schema file which can aid in editing the NLog.config file. The NLog wiki is pretty good about documenting the different options. In short, the config is broken into two sections:

In the last post, we saw how to get started hooking things up with Windsor, TopShelf, and NLog. This post will take a look at logging with Windsor and NLog. We'll also look at using a utility called Log2Console to view our log messages as they are generated.

The Packages

This post looks at using the following NuGet packages:

Installing Castle.Windsor-NLog ensures that all the appropriate logging dependencies are included in our application. NLog.Schema adds some Intellisense to help with the NLog.config file.

Initializing the Logger

Windsor's behavior can be extended through the use of facilities. A handy one, available out of the box, is the Logging Facility. We'll be setting up to use NLog as our framework. Windsor is configured to use NLog by adding the appropriate facility:

It is possible to specify various options: config file location, log target, etc. We'll be using the default NLog beahvior which looks for a file called NLog.config in the root directory of the assembly.

Configuring the Logger

Windsor's behavior can be extended through the use of facilities. A handy one, available out of the box, is the Logging Facility. We'll be setting up to use NLog as our framework. Windsor is configured to use NLog by adding the appropriate facility:

container.AddFacility<LoggingFacility>(facility => facility.LogUsing(LoggerImplementation.NLog))

It is possible to specify various options: config file location, log target, etc. We'll be using the default NLog beahvior which looks for a file called NLog.config in the root directory of the assembly.

Configuring the Logger

Adding the NLog.Schema package to a project adds a schema file which can aid in editing the NLog.config file. The NLog wiki is pretty good about documenting the different options. In short, the config is broken into two sections:

- Targets. These describe the formatting, content, and destination of the log message.

- Rules. These describe what gets logged, and to what target the message is sent.

A nifty trick which can be used while you're working on things is to use NLog in conjunction with a utility called Log2Console. To use Log2Console to view log message, you will need to add a receiver. This will allow it to receive messages. First click the Receivers button, then click the Add... button. Add a UDP receiver, leaving the default settings.

After adding the receiver, the NLog.config file will need to be updated. A Chainsaw target will need to be added. There will also need to be the corresponding rule created:

Once it's setup, Log2Console should catch the messages sent to NLog by your application.

Conclusion

This showed a quick and dirty way of viewing log messages in real time. It's something that could be useful while working on applications. Next up will be a look at setting up WCF endpoint in our TopShelf app.

Thursday, March 7, 2013

Building a Service App: Adding Windsor and TopShelf

Preface

In the last post we started with a basic exception handling clause in our console application. This time, we'll be dropping in TopShelf, Windsor, and some Logging bits. The example code is available on GitHub.

The Service Class

The service class will be very simple to start. All it will do is write a couple messages to our logger:

To make this class available elsewhere, we'll need to register it with our IoC container, Windsor. There are a lot of different ways to register components. This time, we'll use an installer. Installers are a convenient way to segregate the registration of your application's components.

We'll need to add other components to this as things go along. But for now, it's a good start.

Updating Program.Main()

Now that we have a service class and an installer we can modify the Program.Main() method.

The ContainerFactory() method creates a new instance of the container. It then configures the container with the appropriate services.

The next method, RunTheHostFactory(), covers the bulk of the TopShelf implementation. It uses the TopShelf HostFactory static class to perform the actual work.

TopShelf's HostFactory use our container to instantiate our service. It also cleans up when the service is stopped. This is the basic TopShelf implementation. Calls to .WhenPaused(), and .WhenContinued() can be added to the HostFactory to handle when the service is paused and resumed in the service control panel.

First Run

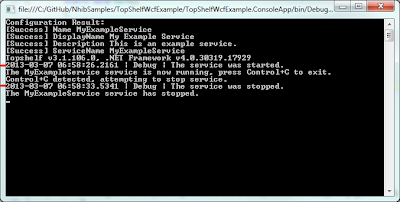

At this point it's possible to build and install our service. A handy thing about TopShelf is that it greatly simplifies using generic services. Running our service from the Visual Studio debugger shows us we have some basic functionality (red tic marks indicate the logger entries made by the service class):

There are some other basic things we can do with a TopShelf-based application. We can run the executable as any other console app can be run:

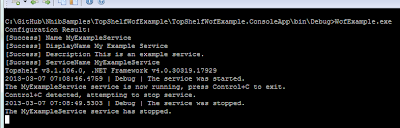

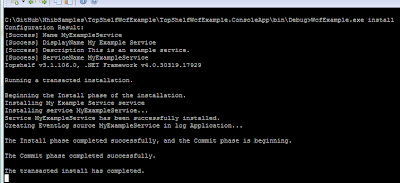

Installing the service is pretty done by adding a parameter, install, to the command:

Once a service is installed, it can be started and stopped:

Finally, the service may be uninstalled:

Conclusion

We covered how to get started with TopShelf. We also looked at setting up an IoC to work with a TopShelf-based application. Next we'll look a little more at logging, and how we can use a component to configure our service.

In the last post we started with a basic exception handling clause in our console application. This time, we'll be dropping in TopShelf, Windsor, and some Logging bits. The example code is available on GitHub.

The Service Class

The service class will be very simple to start. All it will do is write a couple messages to our logger:

public interface IExampleService

{

void Start();

void Stop();

}

public class ExampleService : IExampleService

{

private readonly ILogger logger;

public ExampleService(ILogger logger)

{

this.logger = logger;

}

public void Start()

{

logger.Debug("The service was started.");

}

public void Stop()

{

logger.Debug("The service was stopped.");

}

}

To make this class available elsewhere, we'll need to register it with our IoC container, Windsor. There are a lot of different ways to register components. This time, we'll use an installer. Installers are a convenient way to segregate the registration of your application's components.

public class MyInstaller : IWindsorInstaller

{

public void Install(IWindsorContainer container, IConfigurationStore store)

{

container.Register(

Component.For<IExampleService>().ImplementedBy<ExampleService>()

);

}

}

We'll need to add other components to this as things go along. But for now, it's a good start.

Updating Program.Main()

Now that we have a service class and an installer we can modify the Program.Main() method.

static void Main()

{

try

{

var container = ContainerFactory();

RunTheHostFactory(container);

}

catch (Exception exception)

{

var assemblyName = typeof(Program).AssemblyQualifiedName;

if (!EventLog.SourceExists(assemblyName))

EventLog.CreateEventSource(assemblyName, "Application");

var log = new EventLog { Source = assemblyName };

log.WriteEntry(string.Format("{0}", exception), EventLogEntryType.Error);

}

}

The ContainerFactory() method creates a new instance of the container. It then configures the container with the appropriate services.

private static IWindsorContainer ContainerFactory()

{

var container = new WindsorContainer()

.Install(Configuration.FromAppConfig())

.Install(FromAssembly.This());

return container;

}

The next method, RunTheHostFactory(), covers the bulk of the TopShelf implementation. It uses the TopShelf HostFactory static class to perform the actual work.

private static void RunTheHostFactory(IWindsorContainer container)

{

HostFactory.Run(config =>

{

config.Service<IExampleService>(settings =>

{

// use this to instantiate the service

settings.ConstructUsing(hostSettings => container.Resolve<IExampleService>());

settings.WhenStarted(service => service.Start());

settings.WhenStopped(service =>

{

// stop and release the service, then dispose the container.

service.Stop();

container.Release(service);

container.Dispose();

});

});

config.RunAsLocalSystem();

config.SetDescription("This is an example service.");

config.SetDisplayName("My Example Service");

config.SetServiceName("MyExampleService");

});

}

TopShelf's HostFactory use our container to instantiate our service. It also cleans up when the service is stopped. This is the basic TopShelf implementation. Calls to .WhenPaused(), and .WhenContinued() can be added to the HostFactory to handle when the service is paused and resumed in the service control panel.

First Run

At this point it's possible to build and install our service. A handy thing about TopShelf is that it greatly simplifies using generic services. Running our service from the Visual Studio debugger shows us we have some basic functionality (red tic marks indicate the logger entries made by the service class):

There are some other basic things we can do with a TopShelf-based application. We can run the executable as any other console app can be run:

Installing the service is pretty done by adding a parameter, install, to the command:

Once a service is installed, it can be started and stopped:

Finally, the service may be uninstalled:

Conclusion

We covered how to get started with TopShelf. We also looked at setting up an IoC to work with a TopShelf-based application. Next we'll look a little more at logging, and how we can use a component to configure our service.

Tuesday, March 5, 2013

Building a Service App: Intro & Unhandled Exceptions

Introduction

We do a lot of service applications where I work. Most of them are self-hosted. That means a lot of console-style apps. This post is to document some of the stuff we're doing, so we have something of a common template.

We'll be using a few tools/technologies:

The example code will be hosted on GitHub.

We'll get started with a basic unhandled exception handler. This will give us a way to capture the error when the application won't even start, and hasn't had time to initialize the logging framework.

Program.Main() and Unhandled Exceptions

When everything fails, including your logging mechanism, it's nice to know what happened. There's just a ton of things that can and do go wrong. One way to help catch these errors is to log to the an event log.

What we've done is place a generic exception handler in the Main() method. It catches generic exceptions, logging them all to the event log. This catches anything that hasn't been caught; your basic unhandled exception handler. Now we have a way to see what happened...

Next...

There you have it. An easy way to capture exceptions when the worst happens. Next up, we'll take a look at dependency injection with Windsor.

We do a lot of service applications where I work. Most of them are self-hosted. That means a lot of console-style apps. This post is to document some of the stuff we're doing, so we have something of a common template.

We'll be using a few tools/technologies:

The example code will be hosted on GitHub.

We'll get started with a basic unhandled exception handler. This will give us a way to capture the error when the application won't even start, and hasn't had time to initialize the logging framework.

Program.Main() and Unhandled Exceptions

When everything fails, including your logging mechanism, it's nice to know what happened. There's just a ton of things that can and do go wrong. One way to help catch these errors is to log to the an event log.

public class Program

{

static void Main()

{

try

{

// do something

}

catch (Exception exception)

{

var assemblyName = typeof(Program).AssemblyQualifiedName;

if (!EventLog.SourceExists(assemblyName))

EventLog.CreateEventSource(assemblyName, "Application");

var log = new EventLog { Source = assemblyName };

log.WriteEntry(string.Format("{0}", exception), EventLogEntryType.Error);

}

}

}

What we've done is place a generic exception handler in the Main() method. It catches generic exceptions, logging them all to the event log. This catches anything that hasn't been caught; your basic unhandled exception handler. Now we have a way to see what happened...

|

| The EventLog |

|

| The captured error message. |

Next...

There you have it. An easy way to capture exceptions when the worst happens. Next up, we'll take a look at dependency injection with Windsor.

Friday, February 22, 2013

PowerShell and TopShelf

Preface

A large part of our development involves generic services. TopShelf simplifies the installation and operation of the services we've been creating. When dealing with a number of services, it can still be a pain to install and start each individual service. To ease that pain point, we created a simple PowerShell script to help.

Deploying the Services

One project involved creating a set of services which were passing messages via RabbitMQ. Setting up a server (test or otherwise) involved dropping 3-6 services in a directory, configuring them, and starting them up. The deployment would be similar to the following:

This script uses Get-ChildItem to look through all the directories in the current directory. When it finds the appropriate .exe file, it executes the TopShelf command on it. This example script uses the name 'topshelfhost.exe' to as the TopShelf enabled app.

Using the script is pretty easy. Just pass it one of the TopShelf commands (start, stop, install, uninstall), and it handles the rest. Here's an example...

Summary

Not the cleanest of scripts, but an example of you PowerShell can be used to ease some other tasks.

A large part of our development involves generic services. TopShelf simplifies the installation and operation of the services we've been creating. When dealing with a number of services, it can still be a pain to install and start each individual service. To ease that pain point, we created a simple PowerShell script to help.

Deploying the Services

One project involved creating a set of services which were passing messages via RabbitMQ. Setting up a server (test or otherwise) involved dropping 3-6 services in a directory, configuring them, and starting them up. The deployment would be similar to the following:

/Parent Directory

TopShelfHelper.ps1

/ServiceA Directory

topshelfhost.exe

... (Other Files)

/ServiceB Directory

topshelfhost.exe

... (Other Files)

The Script

The script is available on GitHub. Here it is for reference:

param(

[parameter(mandatory=$true)]

[validateset("start","stop","uninstall", "install")]

$command

)

$ErrorActionPreference = "Stop"

push-Location

try

{

get-ChildItem -Path $pwd -Recurse |

where-Object {$_.name -eq 'topshelfhost.exe'} |

select-Object fullname |

foreach-object {& $_.fullname $command}

"INFO: Operation completed successfully."

}

catch

{

$Error[0]

"ERROR: An error occurred performing the operation."

}

finally

{

pop-Location

}

This script uses Get-ChildItem to look through all the directories in the current directory. When it finds the appropriate .exe file, it executes the TopShelf command on it. This example script uses the name 'topshelfhost.exe' to as the TopShelf enabled app.

Using the script is pretty easy. Just pass it one of the TopShelf commands (start, stop, install, uninstall), and it handles the rest. Here's an example...

Summary

Not the cleanest of scripts, but an example of you PowerShell can be used to ease some other tasks.

Subscribe to:

Comments (Atom)